Essay 11.1 Studying Brain Areas for Language Processing

How the brain understands speech and language is one of the oldest and most exciting questions in psychology. Two of the most important early observations of cortical functions related to speech were made by surgeon Pierre Paul Broca and neurologist Carl Wernicke in the nineteenth century.

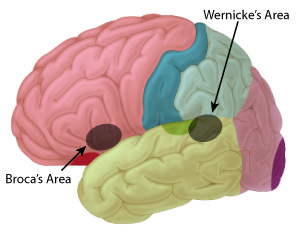

Broca conducted an autopsy on a stroke patient who seemed able to understand everything that people said to him, yet could not speak more than a few syllables. Broca found a lesion as big as a chicken egg at the third convolution of the frontal lobe on the left side of the brain (see the accompanying figure). This area in the left frontal cortex became known as Broca’s area, and the syndrome resulting from damage to it (difficulty in producing speech but a retained ability to understand spoken language) has been termed expressive aphasia.

Approximate locations of Broca’s and Wernicke’s areas of the human cerebral cortex.

About a decade later, Wernicke reported that damage to the posterior superior left temporal lobe (see image) resulted in a complementary disorder. Patients with damage to this region, now known as Wernicke’s area, suffered from receptive aphasia: difficulty comprehending language, but with a retained ability to produce relatively fluent, though meaningless, speech. (As an example, when asked “What brings you to the hospital?” one patient with receptive aphasia responded, in part, “...a month ago, quite a little, I’ve done a lot well, I impose a lot, while, on the other hand, you know what I mean, I have to run around, look it over...”)

Wernicke’s area is located just behind the auditory cortex, leading Wernicke to suggest that this area of the brain is responsible for decoding the meaning of perceived speech sounds. Broca’s area, which is closer to the motor cortex, was presumed to be responsible for going in the other direction: converting ideas into spoken words. Thus, an intact Broca’s area along with a damaged Wernicke’s area results in fluent production but impaired comprehension, while an intact Wernicke’s area with a damaged Broca’s area leads to unimpaired comprehension but disfluent production.

There is also a connection between Broca’s and Wernicke’s areas, called the arcuate fasciculus. Severing this connection leads to a third condition, conduction aphasia. Patients with conduction aphasia retain the ability to comprehend language (Wernicke’s area is still functioning) and to produce spontaneous speech (Broca’s area is still functioning), but they cannot repeat sentences that they have heard (Wernicke’s area can’t send its output to Broca’s area). This type of aphasia was actually predicted by Wernicke before the arcuate fasciculus was discovered.
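The logic of this classical account can be written out as a small lookup: each language ability depends on a set of structures, and a lesion removes every ability that depends on a damaged structure. The Python sketch below is only a toy encoding of the three syndromes described above, not a model of real brain function. The structure and ability names, the `REQUIRED` table, and the functions `preserved_abilities` and `classify` are all hypothetical labels chosen for illustration.

```python
# Toy encoding of the classical lesion-to-syndrome account described above.
# All names are illustrative assumptions, not terms from the original essay.

# Structures that each ability depends on in the classical account.
REQUIRED = {
    "comprehension": {"wernicke"},
    "fluent_speech": {"broca"},
    # Repetition needs the whole route: hear it, pass it along, say it.
    "repetition": {"wernicke", "arcuate_fasciculus", "broca"},
}


def preserved_abilities(lesioned):
    """Return {ability: True/False}; True means no required structure is damaged."""
    lesioned = set(lesioned)
    return {
        ability: not (structures & lesioned)
        for ability, structures in REQUIRED.items()
    }


def classify(lesioned):
    """Name the syndrome that the classical account predicts for a lesion."""
    p = preserved_abilities(lesioned)
    if all(p.values()):
        return "no aphasia predicted"
    if p["comprehension"] and not p["fluent_speech"]:
        return "expressive aphasia"
    if p["fluent_speech"] and not p["comprehension"]:
        return "receptive aphasia"
    if p["comprehension"] and p["fluent_speech"] and not p["repetition"]:
        return "conduction aphasia"
    # Combined lesions are not described in the essay, so the sketch does not guess.
    return "not covered by this sketch"


if __name__ == "__main__":
    for lesion in (set(), {"broca"}, {"wernicke"}, {"arcuate_fasciculus"}):
        print(sorted(lesion), "->", classify(lesion))
```

Writing the account this way makes its central assumption explicit: each ability is tied to a fixed, cleanly separable set of structures.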

These findings were very exciting, and they influenced thinking about speech and language for over a century. However, Broca’s and Wernicke’s theories are not entirely correct, in large part because it is extremely difficult to draw strong conclusions about brain processes from brain injuries alone. Brain damage from a stroke follows the pattern of blood vessels, not of brain function, so the damage might cover only part of the region that supports a particular function and leave some of that function intact. Alternatively, the damage could cover a wider region that includes all of the tissue supporting one function and parts of the tissue supporting others.

There is another difficulty with using brain lesions as evidence for brain organization. Sometimes a lesion disrupts a pathway between two brain areas that are important for some psychological function (e.g., the arcuate fasciculus in conduction aphasia). When that function breaks down following a stroke, we can mistake the pathway for the actual location of the function. For these reasons and others, it became increasingly apparent that precisely defining either Broca’s or Wernicke’s area is very difficult, and modern researchers are very careful when describing the relationship between anatomy and function.

Besides the general difficulties in determining regions of brain function on the basis of brain damage, there are some particular challenges for understanding where in the brain speech and language are processed. It is especially hard to disentangle speech perception from the higher cognitive processes of language comprehension. For example, we might conduct an experiment using sequences of speech sounds that are not words, wrongly believing that we have avoided language per se. Can we really assume that when listeners hear pseudowords such as “tig” and “gup,” they are not using the higher language processes that they would use if the sounds were real words like “pig” and “cup”?

It is also very difficult to separate speech perception from the perception of other types of complex sounds. For example, we might try to isolate the processing of speech by comparing it to the processing of nonspeech sounds of similar complexity. This turns out to be very hard to do. First, because languages use such a wide variety of sounds, it is nearly impossible to construct a complex sound that does not have acoustic features in common with any of the 850 different speech sounds used by languages. Second, as we learned earlier, listeners can understand speech that has been severely degraded by filtering or other methods. Many complex “nonspeech” sounds can be heard as speech if listeners try hard enough.

These last two problems extend beyond the neuropsychological study of patients who have suffered brain damage. They are also problems for experiments that use modern methods to localize cortical processing, such as electroencephalography (EEG), magnetoencephalography (MEG), positron emission tomography (PET), and functional magnetic resonance imaging (fMRI). In addition, these four methods have their own limitations. EEG and MEG measures of neural activity are very good at recording the precise timing of brain activity, but they are not very good at spatially localizing where the activity came from. Imaging methods that measure changes in cerebral blood flow or oxygen use (PET and fMRI) are much better at telling us exactly where the brain is active, but these methods are quite slow compared to the speed of auditory perception. Because of these limitations, most conclusions regarding cortical processing of speech remain somewhat tentative. Despite this, we have still been able to learn a lot about how and where the brain processes speech.